Large generative diffusion models have revolutionized text-to-image generation and offer immense potential for conditional generation tasks such as image enhancement, restoration, editing, and compositing. However, their widespread adoption is hindered by the high computational cost, which limits their real-time application.

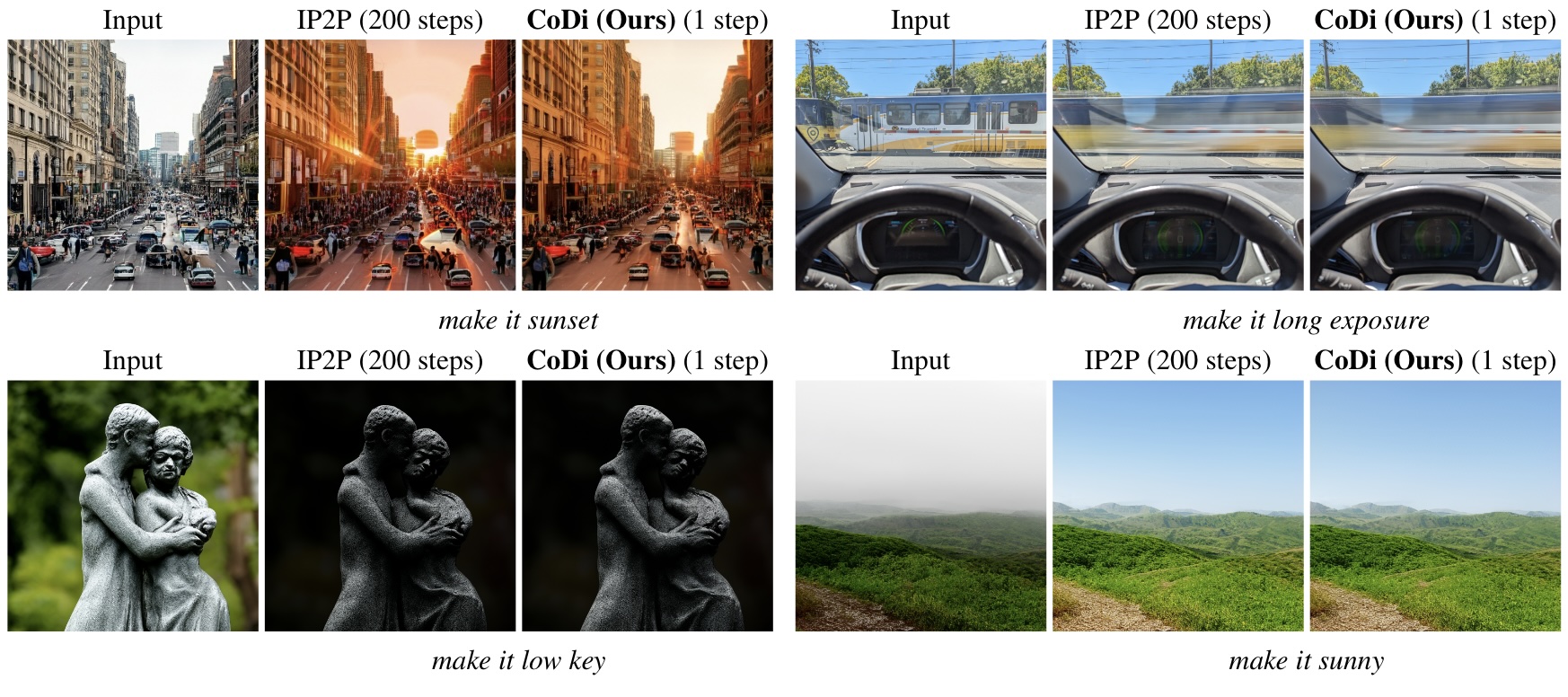

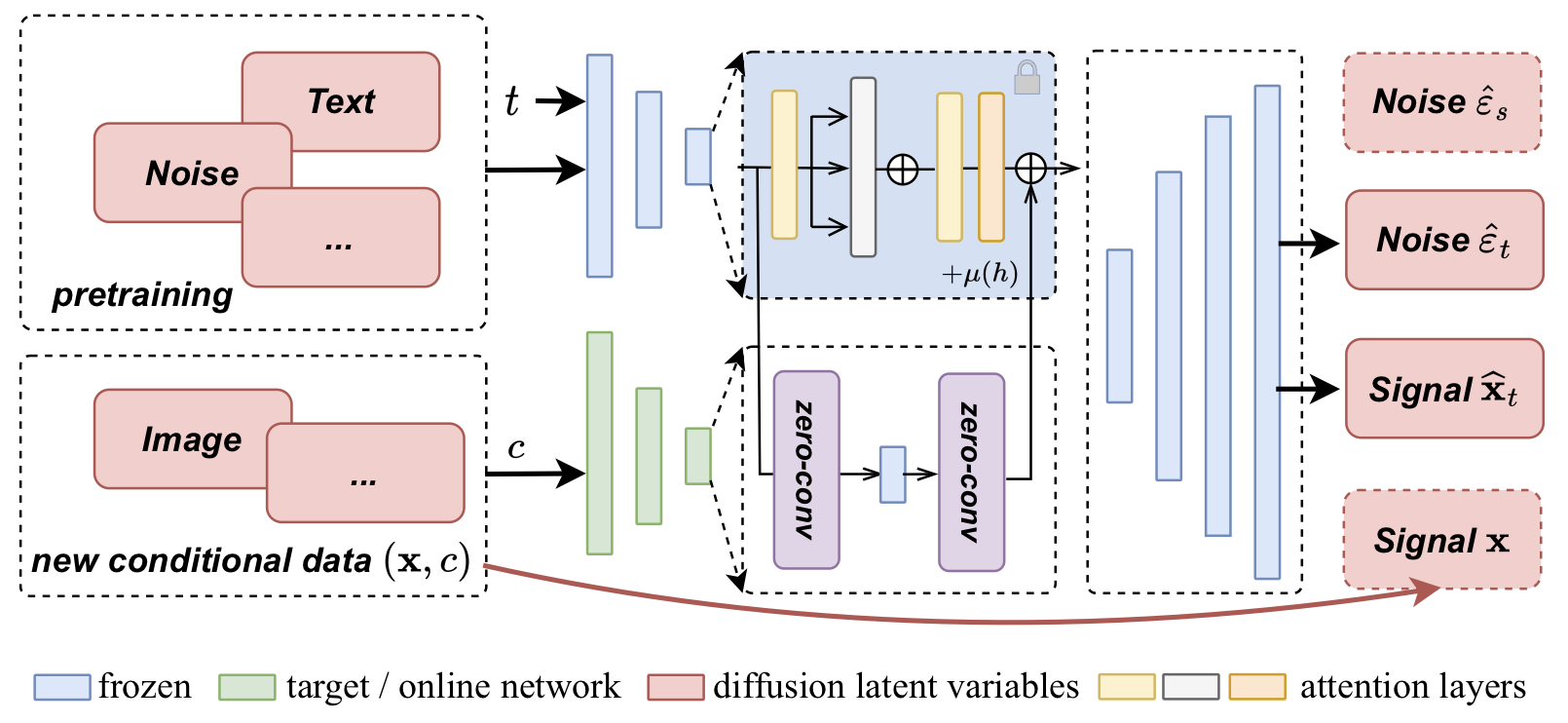

To address this challenge, we introduce a novel method, dubbed CoDi, that adapts a pre-trained latent diffusion model to accept additional image conditioning inputs while significantly reducing the number of sampling steps required to achieve high-quality results. Our method can leverage architectures such as ControlNet to incorporate conditioning inputs without compromising the model's prior knowledge gained during large-scale pre-training. Additionally, a conditional consistency loss enforces consistent predictions across diffusion steps, effectively compelling the model to generate high-quality conditional images in a few steps.
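To make the idea of "consistent predictions across diffusion steps" concrete, here is a minimal toy sketch in plain Python. It is not the loss used in the paper: the cosine schedule, the squared-error distance, and the names `cosine_schedule`, `add_noise`, `consistency_loss`, and `make_toy_denoiser` are all assumptions introduced for illustration. It only shows the general shape of a consistency-style objective, where predictions made from two noise levels of the same sample, under the same condition, are pushed to agree.

```python
import math
import random


def cosine_schedule(t):
    """Toy variance-preserving schedule (assumed): (alpha_t, sigma_t) for t in [0, 1]."""
    return math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)


def add_noise(z0, eps, t):
    """Diffuse a clean latent z0 to time t using a shared noise sample eps."""
    alpha, sigma = cosine_schedule(t)
    return [alpha * z + sigma * e for z, e in zip(z0, eps)]


def consistency_loss(predict, z0, eps, cond, t, s):
    """Mean squared gap between clean-latent predictions at two times t > s.

    In real training the prediction at s would typically be treated as a
    fixed target (no gradient). This toy has no autograd, so that detail
    is only noted here, not implemented.
    """
    pred_t = predict(add_noise(z0, eps, t), cond, t)
    target_s = predict(add_noise(z0, eps, s), cond, s)
    return sum((a - b) ** 2 for a, b in zip(pred_t, target_s)) / len(z0)


def make_toy_denoiser(weight):
    """Hypothetical stand-in for the conditional network.

    weight=0 ignores the condition and echoes the noisy latent;
    weight=1 returns the condition regardless of the noise level.
    """
    def predict(z_t, cond, t):
        return [(1.0 - weight) * z + weight * c for z, c in zip(z_t, cond)]
    return predict


rng = random.Random(0)
z0 = [rng.gauss(0, 1) for _ in range(8)]
eps = [rng.gauss(0, 1) for _ in range(8)]
cond = list(z0)  # pretend the condition carries the clean content

loss_inconsistent = consistency_loss(make_toy_denoiser(0.0), z0, eps, cond, t=0.8, s=0.6)
loss_consistent = consistency_loss(make_toy_denoiser(1.0), z0, eps, cond, t=0.8, s=0.6)
print(loss_inconsistent, loss_consistent)
```

A predictor whose output changes with the noise level incurs a positive loss, while one that gives the same answer at both steps incurs none; driving this gap toward zero is what makes few-step sampling plausible.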

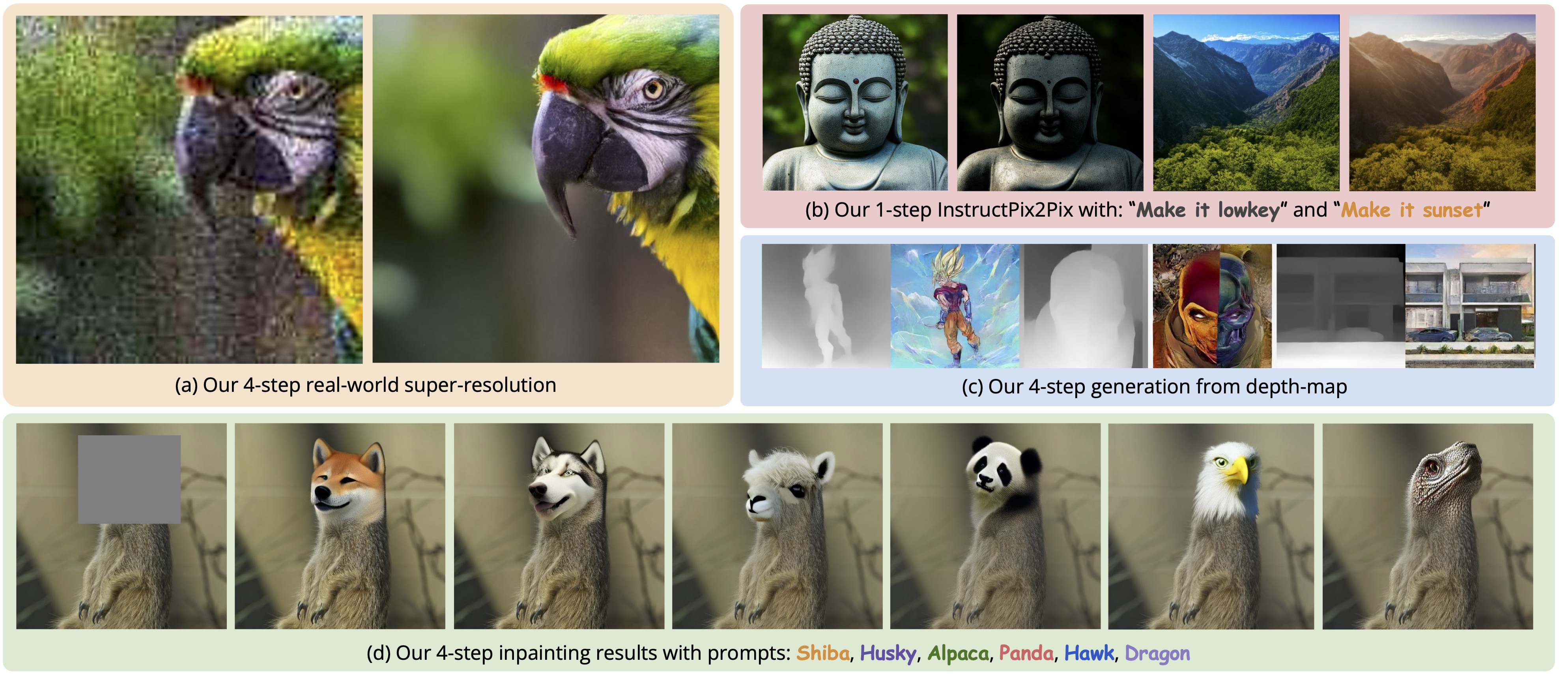

Our conditional-task learning and distillation approach outperforms previous distillation methods, achieving a new state-of-the-art in producing high-quality images with very few steps (e.g., 1-4) across multiple tasks, including super-resolution, text-guided image editing, and depth-to-image generation.

Compared with previous two-stage distillation methods (either distillation-first or fine-tuning-first), CoDi is the first single-stage distillation method that directly learns faster conditional sampling from text-to-image pre-training, yielding a fully distilled conditional diffusion model.

CoDi offers the flexibility to update only the parameters pertinent to distillation and conditional fine-tuning, leaving the remaining parameters frozen; we call this variant Parameter-Efficient CoDi (PE-CoDi). This introduces a new form of parameter-efficient conditional distillation that aims to unify the distillation process across commonly used parameter-efficient fine-tuning schemes for diffusion models, including ControlNet and T2I-Adapter. Our method can then optimize the conditional guidance and the consistency by updating only the duplicated encoder.
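The selective-update idea can be sketched in a few lines of plain Python. This is an illustrative toy under stated assumptions, not the paper's implementation: the parameter names (`backbone.*`, `control_encoder.*`), the scalar "weights", and the helpers `trainable_names` and `sgd_step` are hypothetical. The point is only that gradients may exist for every parameter while the update touches just the duplicated encoder.

```python
def trainable_names(params, trainable_prefixes):
    """List parameter names whose prefix marks them as trainable."""
    prefixes = tuple(trainable_prefixes)
    return [name for name in params if name.startswith(prefixes)]


def sgd_step(params, grads, trainable_prefixes, lr=0.1):
    """Return updated parameters; frozen parameters are copied unchanged."""
    selected = set(trainable_names(params, trainable_prefixes))
    return {
        name: value - lr * grads[name] if name in selected else value
        for name, value in params.items()
    }


# Hypothetical parameter layout: a frozen backbone plus a duplicated encoder.
params = {
    "backbone.encoder.w": 1.0,
    "backbone.decoder.w": 2.0,
    "control_encoder.w": 1.0,
}
grads = {name: 0.5 for name in params}  # pretend every parameter has a gradient

updated = sgd_step(params, grads, trainable_prefixes=["control_encoder."])
print(updated)
```

Because the backbone values are never modified, the original text-to-image behavior is preserved by construction, and only the small trainable subset needs to be stored per task.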

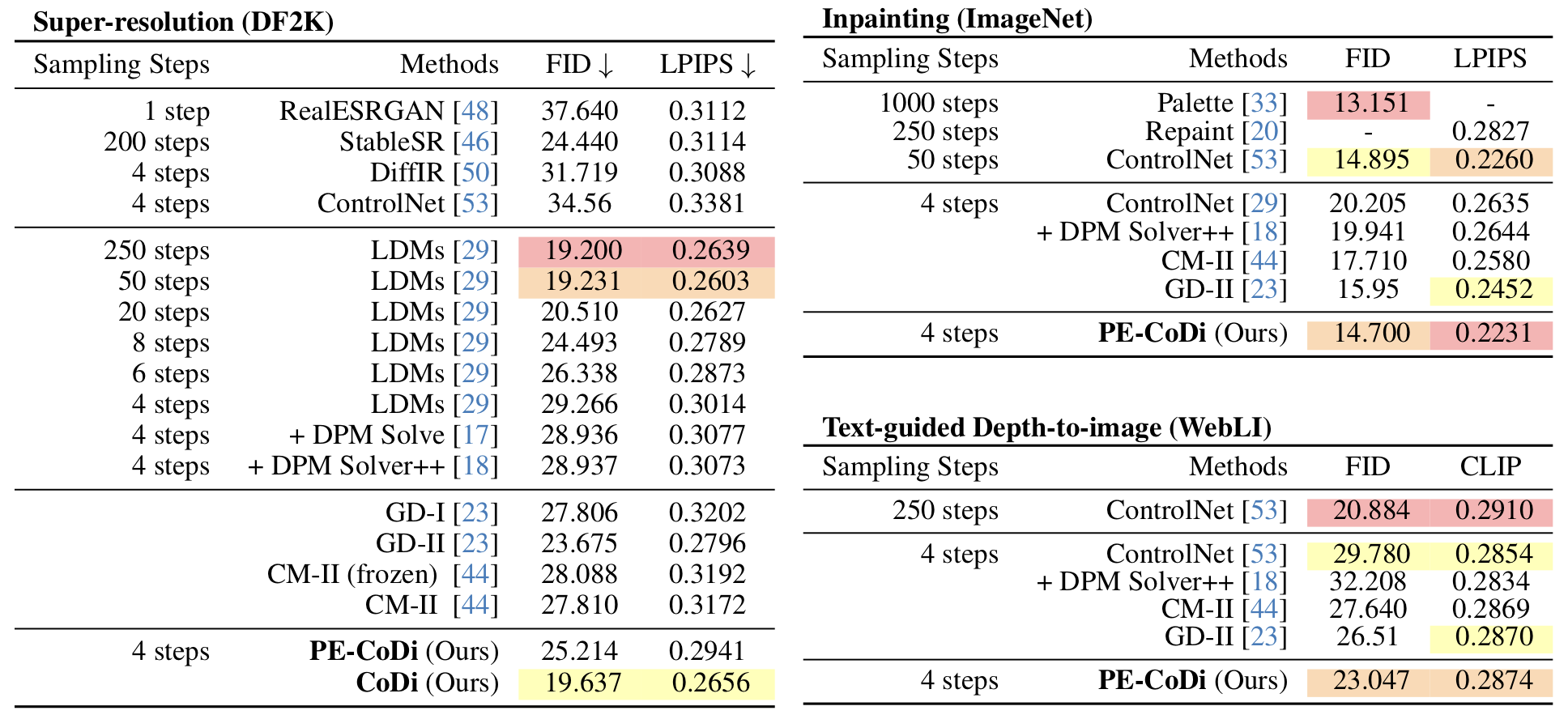

Quantitative performance comparisons between the baselines and our methods. In 4 steps, our model achieves performance comparable to that of models sampled in 250 steps. The 4-step sampling results of our parameter-efficient distillation (PE-CoDi) are comparable to the original 8-step sampling results, and because its backbone is frozen, PE-CoDi does not sacrifice the original generative performance.

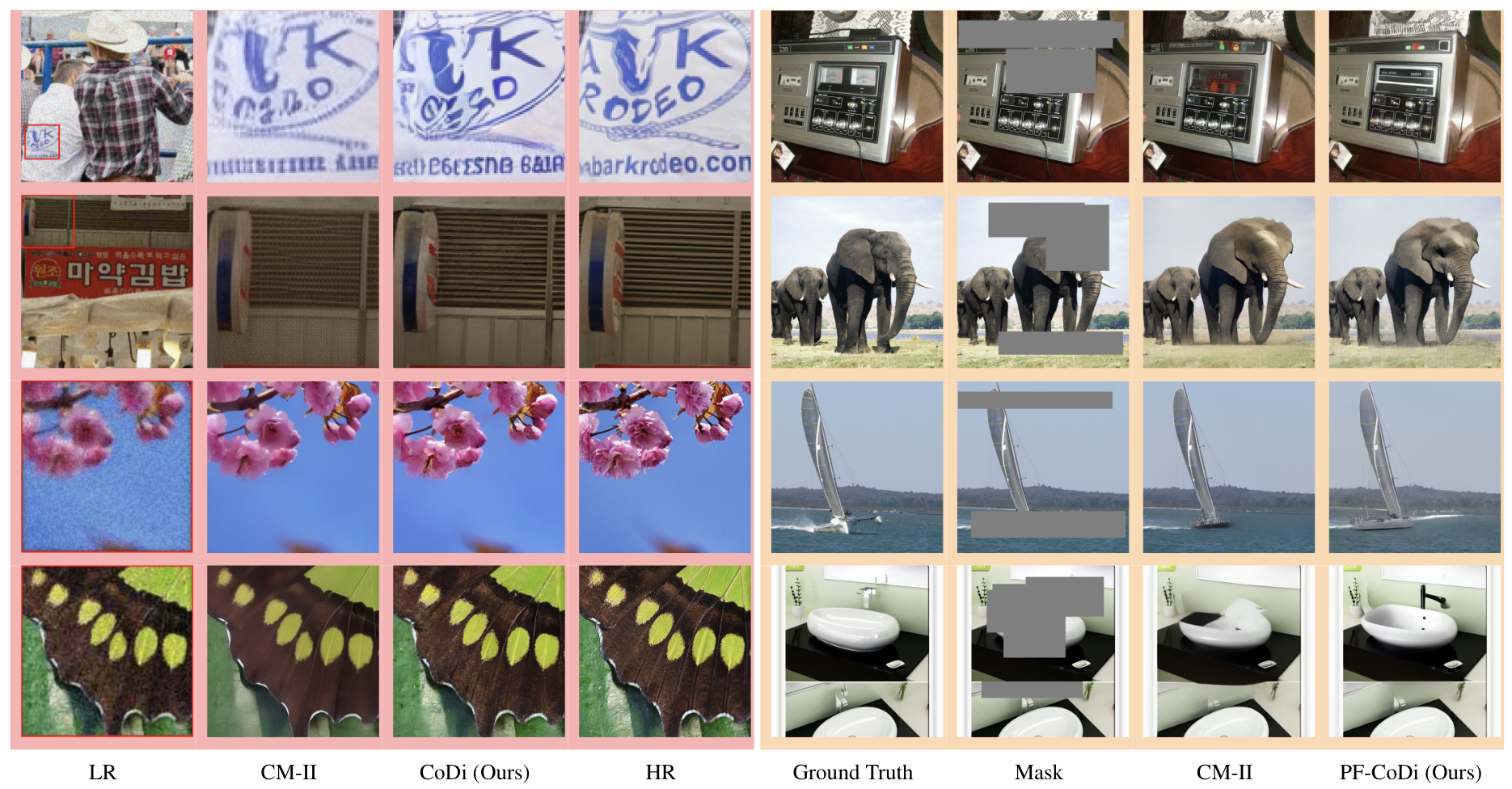

CoDi outperforms the consistency models by generating higher quality results on the super-resolution (left) and text-guided inpainting (right) benchmarks.

The authors would like to thank our colleagues Keren Ye and Chenyang Qi for reviewing the manuscript and providing valuable feedback. We also extend our gratitude to Shlomi Fruchter, Kevin Murphy, Mohammad Babaeizadeh, and Han Zhang for their instrumental contributions in facilitating the initial implementation of the latent diffusion models.

@inproceedings{mei2024conditional,
  title={CoDi: Conditional Diffusion Distillation for Higher-Fidelity and Faster Image Generation},
  author={Mei, Kangfu and Delbracio, Mauricio and Talebi, Hossein and Tu, Zhengzhong and Patel, Vishal M and Milanfar, Peyman},
  year={2024},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
}